On Monday, OpenAI rolled out Sora, its artificial intelligence (AI) model for generating videos. The launch, which follows a long wait marked by delays and previews limited to select users, is noteworthy for digital content creation. Sora marks a significant step in AI capabilities and caters to the growing demand for high-quality video content among digital creators and businesses alike.

Alongside Sora, OpenAI introduced a variant called Sora Turbo. This enhanced version of the original model generates video at up to 1080p resolution, with a maximum duration of 20 seconds per clip. OpenAI is offering Sora as a standalone product whose limits depend on the user’s subscription level, a choice that reflects its strategy of monetizing its offerings while keeping them within an ecosystem that prioritizes user experience and safety.

Exclusive Access and Subscription Levels

Currently, Sora is available only to subscribers of ChatGPT’s paid plans, Plus and Pro. This approach serves as a filter, allowing only those already invested in the ChatGPT ecosystem to explore the video generation capabilities. Plus subscribers are capped at 50 videos per month at lower resolutions, whereas the Pro plan, which costs around $200 per month, offers higher usage limits along with higher resolutions and longer durations. However, the specifics of these “higher resolutions” and “longer durations” remain vague, leaving potential users unsure what the upgrade actually includes.

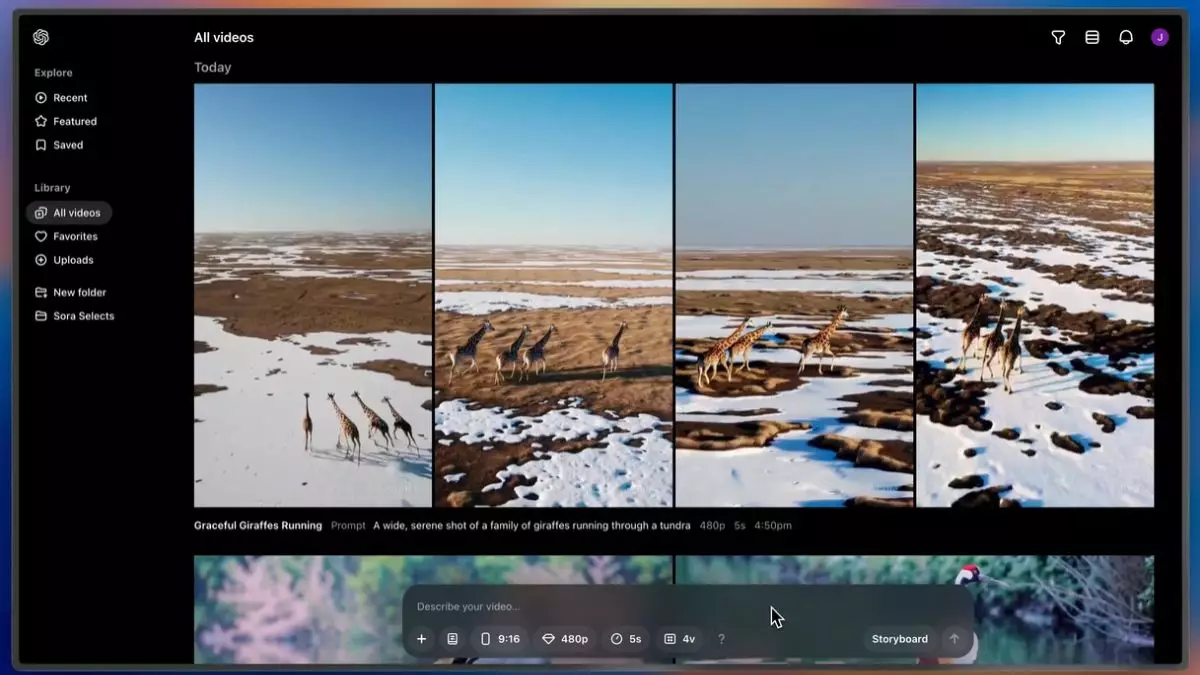

What truly sets Sora apart is its flexibility. Users can generate videos from scratch using text prompts, upload their own media to remix, and use a storyboard interface for precise frame-by-frame control. This level of control opens up many creative possibilities, enabling not just casual users but also professionals to use the technology in their workflows. The model supports widescreen, vertical, and square aspect ratios, which broadens its appeal and utility across different platforms and audiences.

Sora’s architecture helps explain its capabilities. It is a diffusion model: generation starts from a video that looks like static noise and gradually removes that noise over many steps. The model works on many frames at once, which helps keep subjects consistent throughout the video. It also adopts a transformer architecture and reuses the recaptioning technique from DALL-E 3, illustrating how OpenAI continues to refine its technologies by building on successful concepts.
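To make the idea of refining many frames together more concrete, here is a deliberately tiny Python sketch. It is not how Sora is implemented: a real diffusion model uses a trained neural network and a carefully designed noise schedule, neither of which is public in detail. The function names (`toy_denoiser`, `generate_clip`, `frame_spread`), the step size of 0.3, and the representation of a "frame" as a short list of numbers are all assumptions made purely for illustration. The sketch only shows the general shape of the process: start from random noise for every frame, then repeatedly nudge all frames toward a prediction that was computed by looking at the whole clip at once.

```python
import random


def toy_denoiser(frames):
    """Hypothetical stand-in for a learned network.

    It looks at every frame at once and predicts a cleaner clip by
    pulling each pixel toward that pixel's average across all frames.
    """
    num_frames = len(frames)
    num_pixels = len(frames[0])
    mean_frame = [
        sum(frame[p] for frame in frames) / num_frames for p in range(num_pixels)
    ]
    return [list(mean_frame) for _ in range(num_frames)]


def generate_clip(num_frames=8, num_pixels=4, steps=20, seed=0):
    """Start from pure noise and refine all frames jointly for `steps` rounds."""
    rng = random.Random(seed)
    frames = [
        [rng.gauss(0.0, 1.0) for _ in range(num_pixels)] for _ in range(num_frames)
    ]
    for _ in range(steps):
        predicted = toy_denoiser(frames)
        # Move each pixel part of the way toward the prediction (assumed step 0.3).
        frames = [
            [x + 0.3 * (p - x) for x, p in zip(frame, pred_frame)]
            for frame, pred_frame in zip(frames, predicted)
        ]
    return frames


def frame_spread(frames):
    """Largest gap between any two frames at the same pixel position."""
    num_pixels = len(frames[0])
    return max(
        max(frame[p] for frame in frames) - min(frame[p] for frame in frames)
        for p in range(num_pixels)
    )


if __name__ == "__main__":
    print("spread before refinement:", frame_spread(generate_clip(steps=0)))
    print("spread after refinement: ", frame_spread(generate_clip(steps=20)))
```

In this toy, the frames start out unrelated and end up nearly identical, because every update is computed from the whole clip rather than one frame in isolation. A real model aims for something much richer, namely coherent motion rather than identical frames, but the benefit of seeing many frames at once is the same in spirit.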

OpenAI’s data sourcing for Sora is broad and multifaceted, including inputs from public datasets, partnerships, and proprietary collections. These efforts point to a commitment to leveraging comprehensive datasets to inform the model’s training. However, the process of collecting data from various sources inevitably raises questions about ethical considerations and the implications of using such data for AI-driven video generation. OpenAI aims to address these concerns by implementing measures to ensure content authenticity and integrity.

A clear priority for OpenAI with the launch of Sora is user safety and mitigating risks that arise from generating realistic videos. The implementation of visible watermarks and adherence to metadata standards set forth by the Coalition for Content Provenance and Authenticity (C2PA) reflect a proactive stance on combating misinformation and misuse of AI-generated content. Moreover, the model is engineered to avoid outputs that depict damaging or abusive content, highlighting OpenAI’s initiative to create a responsible AI environment.

The launch of Sora is a significant moment for AI video generation, offering tools that democratize creativity while remaining cognizant of ethical responsibilities. Its subscription-based model raises questions about accessibility, but the features it provides are designed to spur innovation among content creators. As OpenAI sets the stage for more sophisticated AI applications, Sora’s introduction could pave the way for future advancements that further bridge the gap between human creativity and artificial intelligence.
