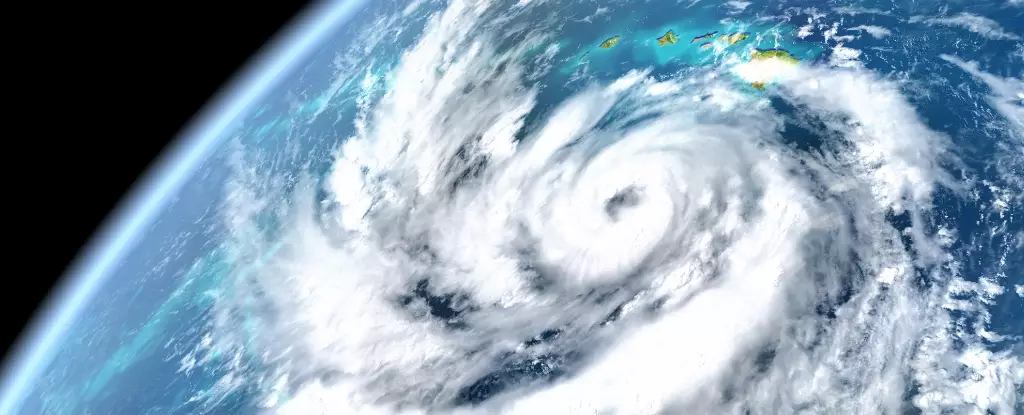

In a groundbreaking leap for meteorological science, Microsoft has introduced an artificial intelligence model named Aurora that is said to surpass existing methods in tracking air quality, weather patterns, and the trajectories of ferocious tropical storms. While its efficacy is supported by research published in the prestigious journal Nature, the implications of this technological advancement stretch beyond mere numbers; they compel us to wrestle with the ethics and reliability of AI in critical sectors. As someone who leans toward center-left liberalism, I find myself both hopeful and cautious.

The model, which has not yet hit the commercial market, reportedly generates accurate 10-day forecasts and predicts hurricane paths at breathtaking speed and at a much lower cost. Paris Perdikaris, the senior author and a mechanical engineering expert at the University of Pennsylvania, noted that Aurora achieved a milestone by outperforming operational hurricane forecasting centers for the first time. This claim raises significant questions about the future roles of traditional forecasting agencies and their reliance on established scientific principles.

AI Versus Tradition: A Paradigm Shift

Traditional weather models are grounded in the principles of physics, namely the conservation of mass, momentum, and energy, and they require immense computational resources that strain even the most advanced hardware. In contrast, Aurora apparently learns from historical data with astonishing efficiency, cutting computational costs to a fraction of what traditional methods incur. It’s easy to get swept away by the allure of such advancements, but we must ask ourselves: is cheaper always better?

The research findings bring to light a crucial ethical dilemma. With the rise of AI-based models, we have to question whether we’re putting too much faith in algorithms that lack emotional intelligence and an understanding of human consequences. While it’s comforting to know that AI can predict with a high degree of accuracy, as it did in forecasting the trajectory of Typhoon Doksuri, it remains to be seen whether these models can adequately account for the nuanced complexities of climate systems, many of which are still poorly understood.

Global Impact and Ethical Concerns

These developments arrive at a pivotal time as we grapple with climate change’s devastating consequences. Unlike current forecasting methods, which have matured over decades, AI models like Aurora represent not just a shift in technology but a potential change in policy-making and emergency preparedness strategies. Local governments often rely on these predictions to mobilize resources and implement public safety protocols. A miscalculation by an AI model could have catastrophic consequences.

Interestingly, Aurora isn’t the first of its kind. Huawei’s Pangu-Weather model entered the fray in 2023, suggesting that heated competition is on the horizon. As various institutions and countries develop their own AI models, the race to claim superiority complicates the narrative surrounding ethical standards and accuracy in weather prediction. Should we not establish a shared set of guidelines to ensure these technologies serve the public interest rather than economic gain?

The Economist’s Responsibility: Scrutinizing AI Predictions

Some skepticism about these transformative technologies is warranted. Weather agencies, including the European Centre for Medium-Range Weather Forecasts (ECMWF), are understandably wary, particularly as they venture into their own AI-influenced models. Florence Rabier, the Director General of the ECMWF, emphasizes the need for accuracy while acknowledging the cost-effectiveness of the agency’s “learning model.” This tension between cost savings and the potential for negligence is not just a bureaucratic concern but a moral one.

While Aurora may have bested previous methods born out of laborious, time-tested approaches, one must consider whether these algorithms are subjected to the same rigors as their human-driven predecessors. It’s imperative that we keep a vigilant eye on AI’s performance as it confronts the unpredictable tapestry of environmental disruptions. We can only hope that as societies inch closer to adopting sophisticated AI models, the human component in forecasting (empathy, situational awareness, and ethical responsibility) remains front and center in how we navigate nature’s fury.
