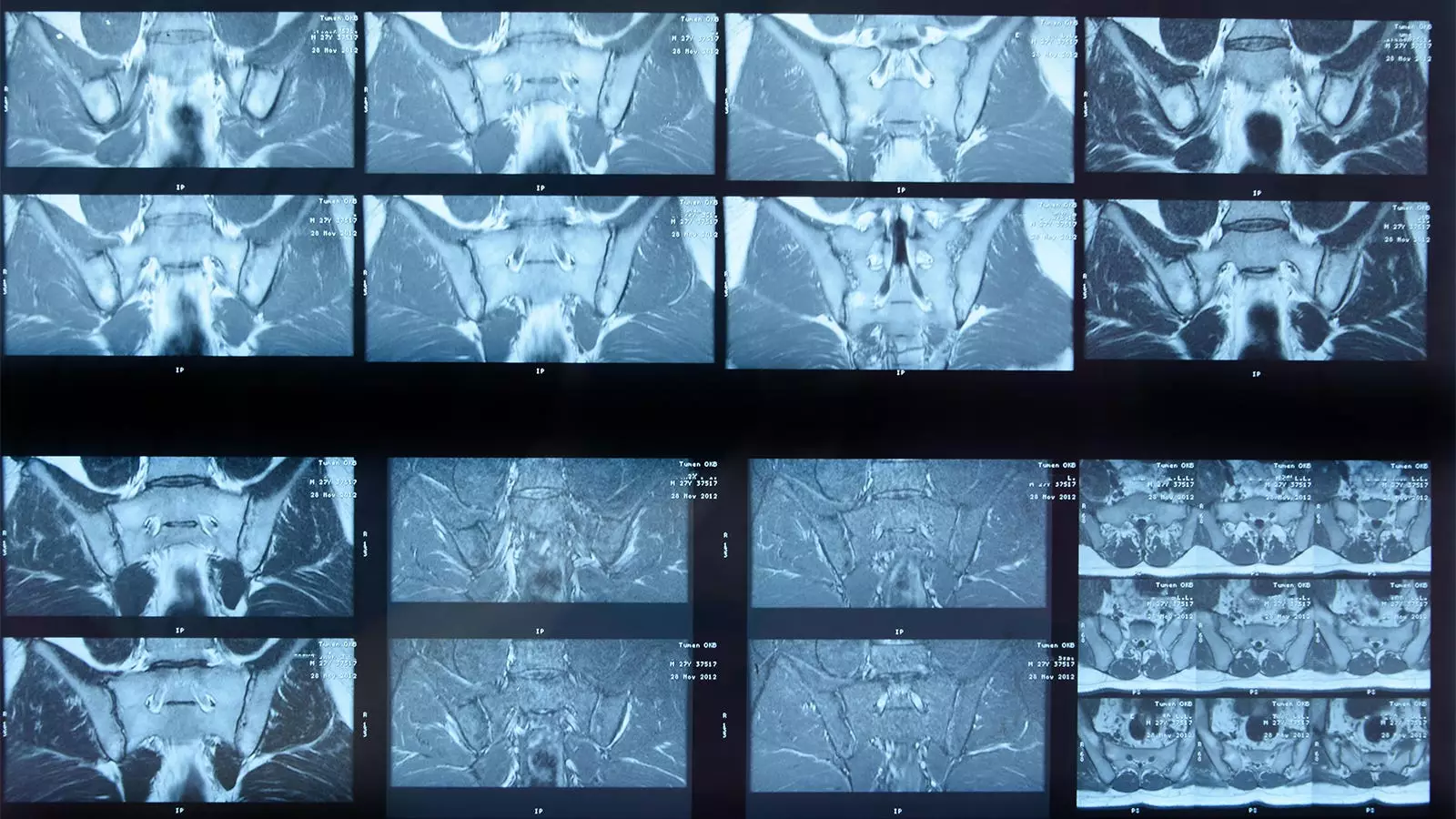

The proliferation of artificial intelligence (AI) in healthcare represents a significant shift in diagnostic techniques, particularly in the realm of radiology. Among various applications, deep learning algorithms are heralded for their potential to analyze complex medical images such as MRI scans. A recent study has focused on the accuracy of an AI algorithm in detecting inflammation in the sacroiliac joint (SIJ) among patients diagnosed with axial spondyloarthritis (axSpA). While the results suggest that this algorithm may serve as a useful tool, there are critical caveats to consider regarding its reliability, clinical applicability, and potential implications within the medical community.

The Study’s Findings: An Overview of Performance

Conducted by Joeri Nicolaes, PhD, and a team of researchers from UCB Pharma in Belgium, the study aimed to validate the performance of an AI algorithm that identifies inflammation in SIJs, a key indicator in diagnosing axSpA. This evaluation involved comparing the algorithm’s outputs against a “gold standard” panel of three expert human readers tasked with analyzing a cohort of 731 MRI scans. The algorithm agreed with these expert assessments in 74% of cases overall, with a sensitivity of 70%, a specificity of 81%, a positive predictive value of 84%, and a negative predictive value of 64%.

However, these findings warrant closer analysis. The agreement figures may look promising, but they do not show that the algorithm can substitute for human intuition and clinical expertise. In 132 cases the human readers identified inflammation that the algorithm missed, a performance gap that could have serious ramifications for patient care.

The statistical metrics used to evaluate the algorithm’s performance are a mix of encouraging and concerning results. A sensitivity of 70% means that roughly 30% of the inflammation cases identified by the expert readers, nearly three in ten, go undetected by the AI, posing a risk of missed diagnosis or delayed treatment. A specificity of 81% means that about 19% of scans without inflammation are nonetheless flagged as inflamed, and these false positives could place unnecessary strain on patients and healthcare resources.
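For readers who want to see how these percentages relate to one another, the short Python sketch below computes each metric from a 2x2 table of algorithm results versus the expert panel. The counts are an assumption made for illustration only: they were chosen to sum to the 731 scans, to include the 132 missed cases, and to round to the reported percentages. They are not the study's published table, and other counts would fit the same figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """2x2 table of algorithm output versus the expert-panel reference."""
    tp: int  # inflammation per experts, flagged by the algorithm
    fp: int  # no inflammation per experts, flagged by the algorithm
    fn: int  # inflammation per experts, missed by the algorithm
    tn: int  # no inflammation per experts, not flagged


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return standard diagnostic accuracy metrics as fractions in [0, 1]."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "ppv": c.tp / (c.tp + c.fp),
        "npv": c.tn / (c.tn + c.fn),
        "agreement": (c.tp + c.tn) / total,
        # Complements: share of true cases missed, share of non-cases flagged.
        "miss_rate": c.fn / (c.tp + c.fn),
        "false_positive_rate": c.fp / (c.tn + c.fp),
    }


# ASSUMPTION: illustrative counts, not taken from the study. Only the total
# (731 scans) and the 132 missed cases come from the article.
illustrative = ConfusionCounts(tp=303, fp=57, fn=132, tn=239)
metrics = diagnostic_metrics(illustrative)

for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

The miss rate is simply one minus sensitivity, and the false positive rate is one minus specificity, which is why a 70% sensitivity corresponds to about 30% of expert-identified cases going undetected.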

Interestingly, the study acknowledges that the gold standard panel of expert readers was likely more skilled than typical practitioners, such as general radiologists or rheumatologists. This raises the question of whether the AI’s performance may degrade further in real-world clinical settings where expertise varies.

Contextual Nuances and Limitations of AI in Clinical Practice

One of the key limitations of the AI algorithm is its reliance on specific criteria for identifying inflammation, which may not encompass the full clinical picture. Notably, the study employed conservative definitions for inflammation, which might skew the algorithm’s ability to detect subtler forms of disease manifestation. Furthermore, the researchers enlisted expert opinion that took into account additional clinical factors, such as C-reactive protein levels and HLA-B27 status, when evaluating cases—contextual factors that an algorithm may overlook.

Moreover, an important caveat was highlighted: the algorithm could not process a significant number of cases (129 from C-OPTIMISE and eight from RAPID-axSpA) due to image size or quality issues. This limitation raises concerns about the generalizability of the AI solution, particularly when the real-world patient population is more diverse and less consistently aligned with defined image specifications. Issues surrounding the algorithm’s inability to identify structural damage further amplify doubts about its clinical utility as a standalone diagnostic tool.

As the healthcare community increasingly incorporates AI technologies, acknowledging the strengths and weaknesses of these tools is crucial. The study by Nicolaes and colleagues concludes with cautious optimism about the algorithm’s capabilities: it achieved adequate metrics for inflammation detection, but its limitations mean that it cannot replace expert judgment.

Moving forward, continuous improvement of AI algorithms must align with shifts in clinical classification criteria, alongside efforts to enhance sensitivity and reduce false positive rates. Future iterations should incorporate structural damage identification capabilities and adapt to the changing landscape of diagnostic requirements in axSpA.

Ultimately, while the prospect of AI in MRI analysis presents exciting opportunities for improving diagnostic accuracy and efficiency in patient care, its limitations underscore the importance of maintaining a collaborative approach that leverages both artificial intelligence and the irreplaceable expertise of human practitioners.
